Best AI Content Detection Tools for ChatGPT, GPT-3 and more [Tested]

Regardless of your opinion on generative AI content, one thing is clear:

AI technology has taken the internet and our lives by storm. Jasper, ChatGPT, Bing, art generators like Midjourney or Stable Diffusion. They are everywhere now.

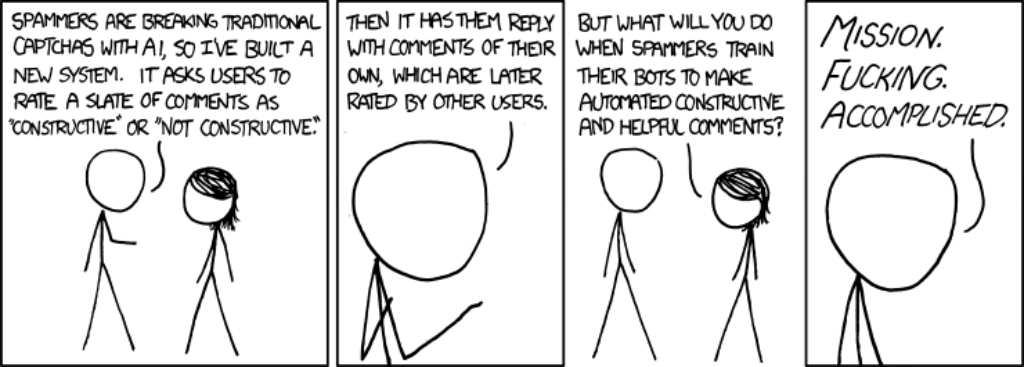

Recently, I saw this post on Facebook that caught my attention:

New markets emerge, new opportunities arise. And new problems too! And the most obvious question about AI writing is this:

How to detect AI generated content and ChatGPT in particular?

In this article, I’ll show you how not to get fooled by a sneaky student or a lazy content writer who uses AI to generate text.

I’ll cover the methods that you can use to check if something was written by AI and how this detection works in general (feel free to skip sections you’re not interested in).

And if you’re that student or a content writer who wants to avoid being detected, keep reading to the end, where I’ll show you how you can bypass AI content detection and write better using AI writing software in good faith.

In a rush? Here are the best AI generated content detection tools:

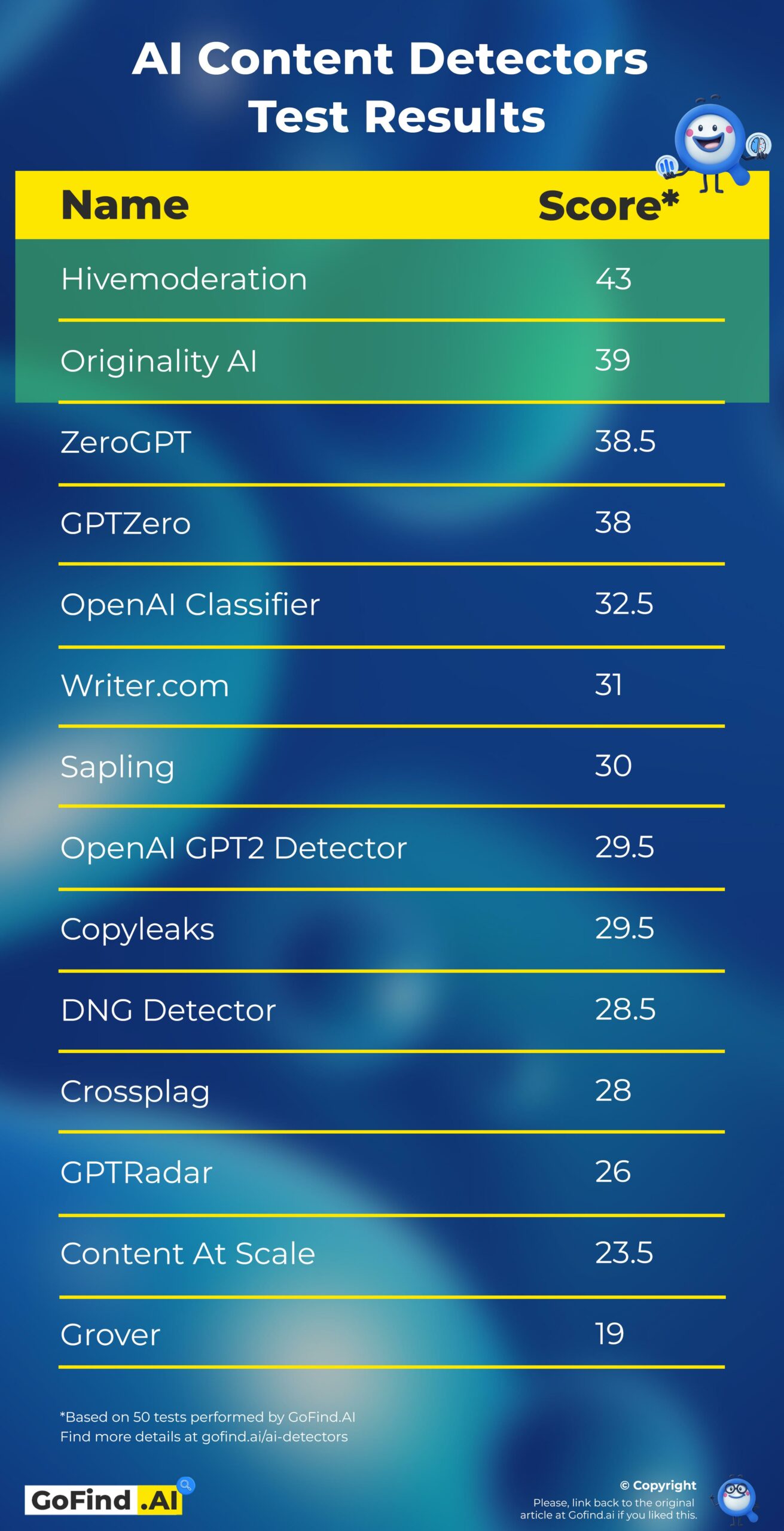

- I did 50 tests to determine the best AI content detector on the market right now. (Feb, 2023)

- Hivemoderation showed the best results and scored 43 points out of 50, with only 7 failed tests.

- Most AI detectors require bigger chunks of texts for detection, and can’t detect shorter ones.

- No AI content detector is 100% reliable. Do not make business or other important decisions based on the AI tool verdict.

- OpenAI AI Text Classifier is accurate about 70% of the time, with 30% false positives in tests with human written texts.

How does AI content detection work?

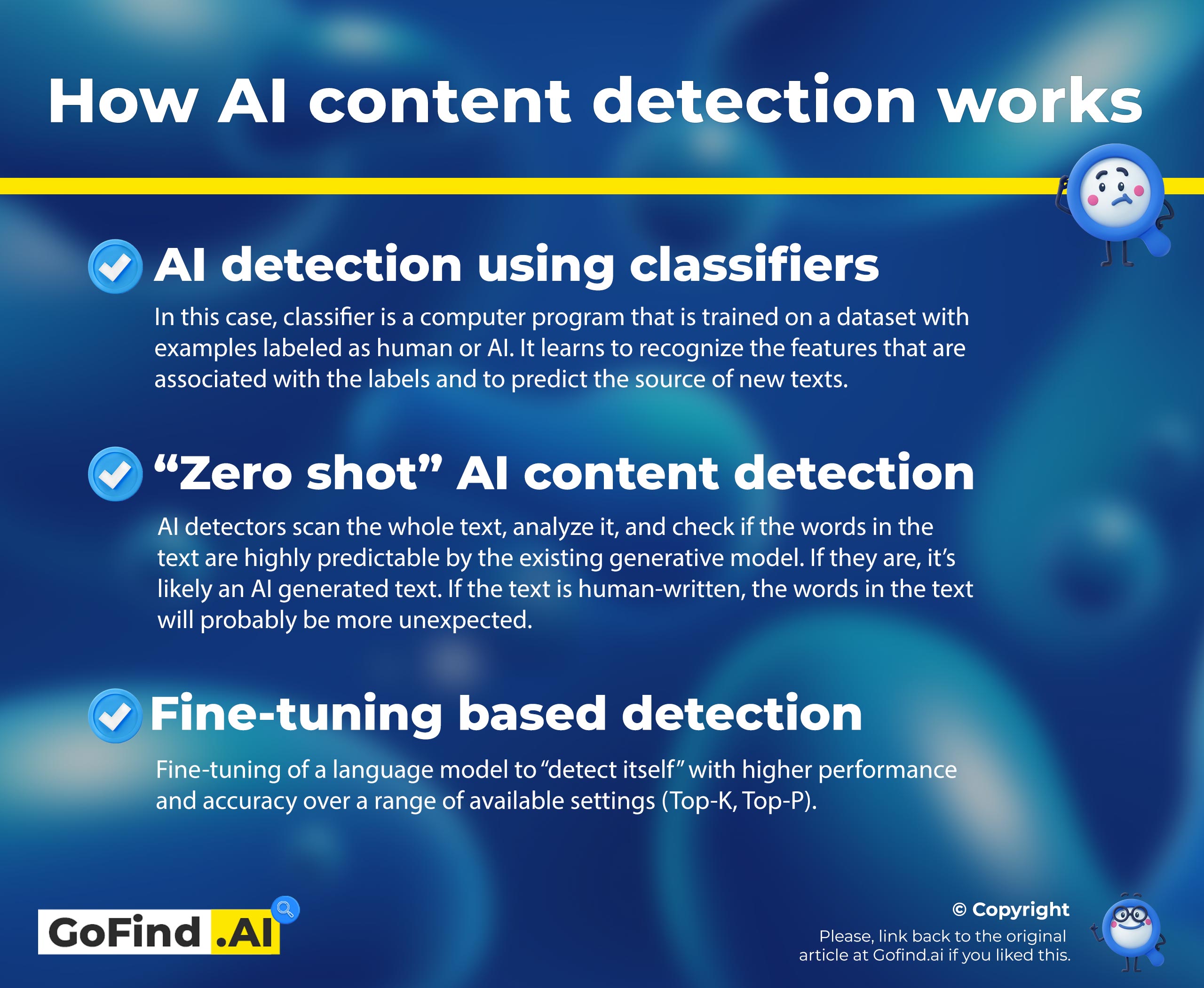

There are a few effective methods to detect AI content:

- Train a classifier model.

- “Zero-shot” detection. Uses the model that generated the text.

- Fine-tuned detection. Same as zero-shot detection, but the generative model gets additional training on both real and AI-generated texts.

Now, let’s get into the details. I’ll try to make everything as simple as possible. 👇

If you don’t want to go deep into the AI detection kitchen, feel free to just skip to the interesting section in the table of contents.

Spoiler: the fun part is the testing section.

AI content detection using a classifier model

We know there are generative language models, like GPT3. But there are also discriminative language models.

Simply put, the difference between them is that generative models are great at producing language, while discriminative models are great at labeling language.

One example of a discriminative model is a classifier.

A classifier model is trained on a dataset with labeled examples. It reads the texts and finds the patterns and the features of the examples. It learns that some labels have those features, and others look different.

So when it then sees a new example, it can confidently say whether it should be labeled A or B.

And if you feed this model enough examples of both real and AI generated texts, it can learn the differences between the two and make a conclusion about a previously unseen example.
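To make the idea concrete, here is a toy sketch of a classifier in Python. It is a tiny word-count (naive Bayes) model trained on four made-up sentences, so everything in it (the example texts, the labels, the function names) is an assumption for illustration only. Real detectors use far larger neural models and far more data.

```python
import math
from collections import Counter

def train(examples):
    """Count words per label. `examples` is a list of (text, label) pairs."""
    word_counts = {}
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts.setdefault(label, Counter()).update(text.lower().split())
    return word_counts, label_counts

def classify(text, word_counts, label_counts):
    """Return the label whose word statistics best explain the text."""
    vocab = set()
    for counts in word_counts.values():
        vocab.update(counts)
    total_docs = sum(label_counts.values())
    best_label, best_score = None, float("-inf")
    for label, counts in word_counts.items():
        # Start from how common the label is, then add evidence word by word.
        score = math.log(label_counts[label] / total_docs)
        total_words = sum(counts.values())
        for word in text.lower().split():
            # Add-one smoothing so unseen words don't zero out the score.
            score += math.log((counts[word] + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label

# Made-up training data, purely for illustration.
examples = [
    ("in conclusion overall it is important to note", "ai"),
    ("overall when it comes to this topic in conclusion", "ai"),
    ("honestly my cat knocked the coffee over again", "human"),
    ("ugh the bus was late so i walked home", "human"),
]
word_counts, label_counts = train(examples)
print(classify("in conclusion it is important overall", word_counts, label_counts))
print(classify("my cat walked home", word_counts, label_counts))
```

The model never "understands" the text. It only learns which words tend to show up under which label, which is the same principle at a much smaller scale.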

I hope it makes sense. If not, I guess you should ask ChatGPT to explain better.

Now, let’s see what the other two methods are.

Zero shot detection using generative models

If you really want to understand how zero shot AI content detection works, you need to understand how AI generates content first. So let me explain:

All popular AI text generators use large language models (LLMs) to generate text. One example of an LLM is GPT3, a model released by OpenAI that powers most popular apps, including ChatGPT.

Basically, an LLM is a piece of software that’s been fed massive amounts of text to analyze the patterns, and then trained to predict the next word for a given prompt based on the patterns it learned from the training dataset.

Sounds too complicated, so I asked ChatGPT to rephrase it ELI5-style:

LLM is a computer program that helps you write by guessing the next word you want to say based on what it has learned from reading lots of texts.

Sounds about right, I guess.

Now, if AI can predict the next word based on previous words, it should be able to calculate the probability of a word’s occurrence in a given text, right?

And that’s exactly what AI detectors do:

They ask the language model if a word has a high probability of occurrence in a given text.

Still too complicated? Let me explain this better:

AI detectors scan the whole text, analyze it, and check if the words in the text are highly predictable. If they are, it’s likely an AI generated text. If the text is human-written, the words in the text will probably be more unexpected.
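Here is a rough sketch of that idea in Python. Instead of a real LLM, it uses a tiny bigram model (it only looks at the previous word) trained on one made-up sentence, so the corpus, the function names, and the numbers are all assumptions for illustration. A real detector would ask a large model like GPT-2 or GPT-3 for these probabilities.

```python
import math
from collections import Counter

def train_bigram(corpus):
    """Count which word follows which in the reference corpus."""
    words = corpus.lower().split()
    follow = {}
    for prev, nxt in zip(words, words[1:]):
        follow.setdefault(prev, Counter())[nxt] += 1
    return follow, set(words)

def avg_log_prob(text, follow, vocab):
    """Mean log-probability of each word given the previous one.

    Higher (closer to zero) means the text is more predictable to the model.
    """
    words = text.lower().split()
    total = 0.0
    for prev, nxt in zip(words, words[1:]):
        counts = follow.get(prev, Counter())
        # Add-one smoothing keeps unseen word pairs from having zero probability.
        total += math.log((counts[nxt] + 1) / (sum(counts.values()) + len(vocab)))
    return total / (len(words) - 1)

# Toy "training data" standing in for what a language model has read.
follow, vocab = train_bigram("the cat sat on the mat and the dog sat on the rug")

predictable = avg_log_prob("the cat sat on the rug", follow, vocab)
surprising = avg_log_prob("rug the on dog mat cat", follow, vocab)
print(predictable > surprising)  # the familiar word order is more predictable
```

A detector built this way would flag text as likely AI-generated when the score is above some threshold. Where exactly to put that threshold is the hard part, and the sketch doesn’t try to answer it.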

Fine-tuned models detection

It’s the same thing as the previous one, except this time, the models are additionally trained to “detect itself” in texts generated with more diverse settings.

The difference between the first approach and this one is that here you don’t have to train your own model from scratch. You can fine-tune an existing pretrained language model like RoBERTa. Strictly speaking, RoBERTa isn’t a generative model: it’s a bidirectional masked language model, and its encoder-only architecture differs from GPT2 or GPT3.

I assume most AI detectors here are using method #3. I’m no expert, though. Feel free to correct me in the comments section. 👇

Can AI content be detected? (Including GPT3 and ChatGPT)

In theory, yes. AI content can be detected. We figured that out in the previous section:

AI writing can be detected by using software that analyzes words’ predictability in a given text and/or by looking for patterns and language features inherent to real/AI-generated texts.

But what if we develop a language model so advanced that it generates texts that are almost indistinguishable from human-written texts?

Or if we max out the randomness settings so that the words in the text would be less predictable? 🤔

Not so sure anymore. And here’s the thing:

The latest AI models, like GPT 3.5, are almost that good. GPT4 is just around the corner, and it is promised to be even more advanced.

After extensive testing of the most popular AI detectors available, I’ve come to the conclusion that AI content detectors cannot identify AI texts reliably and consistently. Especially if human “operators” have put some thought into the process.

In fact, occasionally, AI detectors may detect human-written text as AI-generated (it happened to one of Seth Godin’s blog posts in one of the tests).

First of all, that happens because some people do write like robots. 😁

And the other reason is that GPT3+ models are really good at mimicking human writing. Especially if the prompts aren’t just simple single-sentence commands. Detectors struggle to tell the difference when the output is that good.

The gap between AI and human written texts is shrinking, and content detectors just can’t keep up.

However, there are some methods and smaller clues that will help you tell AI content from human-written texts.

Let’s see what we can do here:

How to detect AI generated content

There are multiple methods to detect AI generated content:

- Analyze style, punctuation, and other linguistic features manually.

- Do the fact-checking.

- Use AI detection software.

Analyze text manually

If you tried using ChatGPT, you would know that it has its own writing style. It is based on the initial prompt that developers hardcoded into the app (or fine-tuned the model on) and the reduced randomness settings of the chat generator.

The style can help you detect AI writing, as well as a couple of other hints:

- Phrases like “first off,” “in conclusion,” “in terms of,” “when it comes to,” “overall,” and similar ones are very repetitive and don’t sound natural when overused. ChatGPT uses them a lot.

- The basic “linear” structure of the texts. It’s not necessarily a sign of AI, but ChatGPT very often follows a very similar text structure that is often used in academic essays. Excessive use of introduction and transitional words is a big indicator.

I recommend using ChatGPT for a week or so to get used to it. You’ll find it very repetitive, and you’ll probably be able to spot AI-generated text much more easily.

- Wikipedia-style generic writing. ChatGPT was trained on a dataset that includes the whole of Wikipedia, which is huge. That style is often used as the default for neutral informative texts, and ChatGPT was created to produce exactly that. By the way, I included some Wikipedia texts in the tests for this very reason, and several AI detectors returned false positives for them.

- Compare the text to the previous work of your student or content writer. It’s very unlikely that a person who used ChatGPT will be able to produce texts in a similar style.

If you’ve never worked with this person before, consider giving him/her a quick test assignment.

One more trick that I discovered works for texts generated with simpler prompts.

Since AI generated text is essentially sophisticated autocompletion, and close to deterministic at low randomness settings, it tends to use the same points and arguments for a specific prompt.

You can try and take a piece of the original text and paste it into ChatGPT or other AI content generator and use it as a prompt. You’ll often see that the generated text is VERY similar to the original one if it’s auto-generated.

This trick doesn’t work if the initial prompts were advanced and included some original data like specs, dates, names, prices, etc. that a model can’t “predict” without a prompt. Or if the generated text was made iteratively with multiple user inputs.
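If you want a number instead of an eyeball comparison, here is a minimal Python sketch using the standard library’s `difflib`. It doesn’t call ChatGPT: `regenerated` is a made-up stand-in for whatever the generator returns when you paste in a piece of the original, and the sample sentences are invented for illustration.

```python
from difflib import SequenceMatcher

def similarity(text_a, text_b):
    """Return a 0..1 similarity ratio between two texts, compared word by word."""
    return SequenceMatcher(None, text_a.lower().split(), text_b.lower().split()).ratio()

# Hypothetical samples: the text under suspicion, a regenerated version,
# and an unrelated human sentence for contrast.
suspect = "Regular exercise improves mood, boosts energy, and supports heart health."
regenerated = "Regular exercise improves mood, boosts energy, and supports a healthy heart."
unrelated = "My grandmother kept bees behind the old barn for forty years."

print(similarity(suspect, regenerated))
print(similarity(suspect, unrelated))
```

There’s no magic cutoff here. Treat a high ratio as one more hint, not as proof.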

Do the Fact-checking

At this point, you should’ve learned how AI models like GPT3 or ChatGPT generate texts:

They just predict the next word to the previous text based on the patterns they learned from the training dataset.

It means that AI doesn’t “know” anything. ChatGPT or GPT3 doesn’t use Internet sources when it generates texts. AI doesn’t have a database of knowledge it can refer to, except the “knowledge” that is embedded in the language itself. In other words:

ChatGPT is bad at facts.

AI models are often hallucinating, making up stuff that isn’t real but sounds real. And bots like ChatGPT are always very confident in what they spew out. So it’s not always obvious that the generated text is full of bs unless you’re an expert who knows his/her/their stuff.

And lazy students and content writers rarely do fact-checking. They can’t even do it properly because if they cheated they probably know very little about the subject.

So if you see some nonsense in the essay or the submitted article, chances are it’s AI generated.

Best AI Content Detectors, Tested and Verified

Disclaimer: I know the tests I carried out for this article aren’t scientifically accurate. I’m not claiming that my rating is ultimately correct, either. But for the lack of similar material, I thought it’d be fun and useful to create one.

The easiest way to check if something was written by AI is to use AI content detection tools. The only question is, which tool exactly?

As ChatGPT and AI technology become more popular every day, the AI content detection market is growing too. New apps launch regularly.

So I collected the most popular and the most promising AI detectors as of today and tested them all. This table is the result:

I will update this article with new apps in the future. Bookmark it!

For the methodology, refer to the section below.

If you don’t agree with the results, share your findings in the comments, or let’s discuss this on Twitter! Tag me: twitter.com/notaigenerated.

If you want to add new AI content detectors, submit the link and the description using the contact form.

How did I test AI detectors – Methodology

For every app, I ran several tests, with three text variations for each. I tested the apps on:

- Raw ChatGPT generated output;

- Raw GPT3 DaVinci model output;

- ChatGPT-generated product reviews;

- Raw GPT3 DaVinci model output with the Temperature setting turned up to 1;

- Raw CohereAI generated output;

- AI generated text using custom prompts (learn more about them in the section about bypassing the detectors);

- Texts written with Jasper AI;

- AI written texts with minor human editing (fact-checked, changed phrasing, spelling, punctuation);

- Texts generated in Bing AI Chat;

- Texts labeled as AI written by Red Ventures brands (CNET, Bankrate, etc.) and that one The Guardian article that claimed to be written with GPT3. Probably heavily edited too;

- Wikipedia texts;

- 100% human writing (NYT Wirecutter pre-AI articles, Seth Godin blog, Paul Graham’s article, etc.);

- Real Amazon reviews;

- Random Reddit posts that were long enough for the min threshold.

We took some prompts for the tests from our list of best ChatGPT prompts. Subscribe to get the list!

All generated texts had to be between 750 and 1500 characters to fit the thresholds and limits of (almost) all AI detectors.

A tool gets 1 point for every successfully passed test.

Sometimes AI detectors weren’t 100% sure about the result or gave a mixed verdict. I decided to give them 1 point if they guessed the right answer with more than 60% probability. If it was between 40% and 60%, they got 0.5 points. If the tool was wrong, it got 0 points.

Some tools didn’t return any probability scores. OpenAI Classifier and GPTZero use ambiguous terms like “Unclear if it is AI written” and “Your text may include parts written by AI“. I didn’t know how to evaluate these verdicts, so I gave them 0.5 points.
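The scoring rules above can be sketched in a few lines of Python. The function name and the way the inputs are represented (a probability between 0 and 1, or `None` for an ambiguous verdict) are my assumptions for illustration, as is the handling of the exact 40% and 60% boundaries.

```python
def score_test(is_ai, ai_probability=None):
    """Score a single test according to the rules described above.

    is_ai: True if the text really was AI generated.
    ai_probability: the detector's probability (0..1) that the text is AI
        generated, or None if it only gave an ambiguous verdict.
    """
    if ai_probability is None:
        # Ambiguous verdicts like "Unclear if it is AI written" get half a point.
        return 0.5
    # How much probability the detector put on the correct answer.
    confidence_in_truth = ai_probability if is_ai else 1 - ai_probability
    if confidence_in_truth > 0.6:
        return 1.0
    if confidence_in_truth >= 0.4:
        return 0.5  # boundary handling at exactly 40% and 60% is an assumption
    return 0.0

print(score_test(is_ai=True, ai_probability=0.9))   # confident and right
print(score_test(is_ai=False, ai_probability=0.9))  # confident and wrong
print(score_test(is_ai=True, ai_probability=0.5))   # on the fence
```

A tool’s total is just the sum of these scores across all 50 tests.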

The test is 100% honest and transparent. I use affiliate links for some tools, but there’s no bias towards them. The results are 100% verifiable at the moment of writing. All tools are tested on the same set of texts that you can find here.

You can see the test results above or in this table.

For detailed results, refer to the tools reviews.

Hivemoderation AI Detector – Best Free AI Content Detector

Hivemoderation is the biggest surprise in this test. I noticed this mostly unknown AI detector about a month ago when it was published on Reddit.

At first, I thought it was one of those basic tools that try to jump on the hype train but fail to deliver decent results. Oh, boy, was I wrong.

Hivemoderation is the best AI content detector there is. It successfully passed 43 out of 50 tests and almost never failed in detecting AI generated content.

More importantly, it only failed once in a run of twenty tests on human-written texts!

Just look at its performance:

| Metric | Result |
| --- | --- |
| AI generated texts detection rate | 85.19% |
| False positives (Human texts identified as AI) | 2.27% |
| Final Accuracy | 91.46% |
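A note on how to read the “Final Accuracy” figure: judging by the numbers, it appears to be the plain average of the AI detection rate and the accuracy on human texts (100% minus the false positive rate). That formula is inferred from the figures, not stated anywhere in the methodology, so treat this Python sketch as an assumption.

```python
def final_accuracy(detection_rate, false_positive_rate):
    """Average of AI-text accuracy and human-text accuracy, in percent.

    Assumed formula: it reproduces the published figures, but it is
    inferred from them rather than taken from the methodology.
    """
    human_accuracy = 100.0 - false_positive_rate
    return (detection_rate + human_accuracy) / 2

# Hivemoderation's figures from the table above.
print(round(final_accuracy(85.19, 2.27), 2))
```

Averaging the two rates means a tool can’t score well just by calling everything “AI” or everything “human”.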

How to bypass Hivemoderation detector

Hivemoderation AI detector failed the following tests completely or partially:

- Increased temperature test. It couldn’t detect one text generated by davinci with increased temperature. I think I could make it even more undetectable if I changed the sampling settings.

- Custom prompt test. It detected 2/3 texts generated using custom prompts and techniques.

- Jasper AI. It couldn’t detect 1/3 texts written by Jasper AI.

- Bing AI: passed 2/3 tests.

You can bypass Hivemoderation AI detector if you change the GPT-3 generation settings (Temperature and sampling).

You can also bypass Hivemoderation AI detector with fine-tuned models like Jasper AI or custom made. But there’s no 100% reliable method. You’ll have to test it yourself.
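Why does Temperature matter so much? Here is a small Python sketch of the usual way temperature is applied when a model picks the next word: the model’s raw scores are divided by the temperature before they are turned into probabilities. The three scores below are made up, and the exact internals of GPT-3’s sampler are an assumption on my part.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, scaled by temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Made-up scores for three candidate next words.
logits = [4.0, 2.0, 1.0]

low = softmax_with_temperature(logits, 0.2)   # almost always picks the top word
high = softmax_with_temperature(logits, 1.0)  # spreads probability more evenly

print([round(p, 3) for p in low])
print([round(p, 3) for p in high])
```

At a higher temperature the less likely words get picked more often, so the text becomes less predictable, and predictability is exactly what many detectors measure.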

Hivemoderation AI Detector Pricing

The tool is completely free to use as an online web demo.

The company offers its detection model as an API service. I couldn’t find the pricing, but you can contact the sales team.

Hivemoderation AI Detector Verdict

The only downside is the threshold of 750 characters that doesn’t allow testing of the smaller pieces of content. But compared to OpenAI’s, I’d call it absolutely reasonable.

It is a totally production-ready model that I personally would base my business decision on.

Originality.AI Review – Best AI Detection Tool for Publishers

Originality AI is an AI content detection software and plagiarism checker founded by Jon Gillham. Although it’s not 100% reliable and sometimes easy to fool, Originality AI showed great results in the tests and took second place in our rating of the best AI content detection software.

How Does It Work?

According to the homepage, Originality detects AI content using its own model trained to detect GPT-3 texts. They don’t share the exact technology, though.

But anyway, the process of content detection is similar to one of the approaches I described earlier.

Is Originality.ai accurate?

No, Originality.ai is not always accurate. According to my tests, Originality AI is accurate in about 82% of all cases.

It’s a high score compared to other detectors but still unreliable. And if we account for the texts that I deliberately crafted to avoid detection (custom prompts, max temperature, fine-tuned models by Jasper, etc.), the success rate drops to about 66%.

Originality AI also showed an interesting false positive for a human written text.

To be fair, this text confused almost all other detectors, and Originality isn’t an exception. But it still shows you can’t blindly rely on a tool without further investigation.

The text I’m talking about is a 213-word excerpt from an article written by Seth Godin in 2006 called Marketing Morality. Many other AI detectors showed false positive results here too. They all thought it was AI-generated.

I couldn’t replicate the false positives with other texts, though. But as an active user, I can definitely say it’s not 100% reliable.

Spoiler: none of the detectors is.

Here are the results of the tests for Originality AI:

| Metric | Result |
| --- | --- |
| AI generated texts detection rate | 66.67% |
| False positives (Human texts identified as AI) | 2.27% |
| Human Texts Accuracy | 97.73% |
| Final Accuracy | 82.20% |

Originality AI Pricing

With Originality AI you pay $0.01 per 100 words of texts. There’s no monthly subscription or any limitations. The app is a pay-as-you-go service, and you only pay for the amount of text you’re processing.

You can add unlimited team members.

You can scan the whole website in a couple of clicks. It’s very useful for the due diligence process if you’re buying a website, for example.

All of your scans are saved in your account, and you can check them later or review your employees’ work in the app.

Originality AI also offers API access for developers. You can build it into your processes. Especially useful for automation and publishing process control.

Originality AI – Is it worth it? Verdict.

I would say it depends.

If you’re a professor checking students’ work, I wouldn’t recommend it. Any AI detector requires additional inspection. Too much is on the table for the student, and you can’t risk his fate by blindly trusting a tool that isn’t 100% reliable.

But if you’re in the content business, you’re hiring copywriters or buying websites, this tool is for you. It’s a great piece of software for automated or strict processes.

It’s good for quick verification when assessing the quality of the articles.

It’s great for large-scale solutions. At 80% reliability for standard ChatGPT and even GPT3 texts, it can be used for mass verification of a large number of articles.

However, keep in mind that you can only use it for ‘consulting’ purposes. You can’t rely on it 100%, and I wouldn’t recommend making business decisions based solely on the Originality AI scan results. It rejected Seth Godin’s article, may I remind you. Use it as one of the signals in your decision making process.

If you believe Originality AI is right for you and you want to choose the best AI content detector, click here to register an account and start using one of the best tools in the industry.

Zero GPT Detector Review

To avoid further confusion: no, it’s not the same app as the more popular GPTZero.

Zero GPT is a simple AI detection tool that uses some “DeepAnalyse Technology,” according to their website.

The app is free.

Zero GPT showed surprisingly great performance in our tests. In fact, it detected AI generated texts on par with the leader of the rating.

Unfortunately, it failed some tests with human written texts and returned false positive results for 30% of samples.

There’s not much to review because it’s a VERY simple tool with one input field and one button.

For specific cases, refer to other AI detection tools.

So here are the performance results. See for yourself:

| Metric | Result |
| --- | --- |
| AI generated texts detection rate | 83.33% |
| False positives (Human texts identified as AI) | 30.00% |
| Final Accuracy | 76.67% |

GPT Zero Review: Accuracy and Reliability – Best AI Detector for Academia

GPT Zero made some noise in the media when it was released. It also went viral on Twitter with 7+ million views.

But is it really an antidote to ChatGPT, as they claim in the media? Let’s see if it lives up to its fame!

GPTZero Overview

Edward Tian made this app as a small side project. But the demand among educators was so high that the app crashed several times after the initial launch and the first mentions in the media.

Initially, GPTZero was a small demo app built on the Streamlit platform. Today it’s a more usable and definitely more accurate tool.

It takes your input and analyzes the text’s perplexity (randomness). Based on the score, it decides if your text is human or AI written. The lower the perplexity score, the more likely the text is generated by AI.
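For the curious, perplexity has a standard definition: take the probability the model assigned to each word, average the negative logs, and exponentiate. Here is a minimal Python sketch. The probability lists are made up, and I don’t know which model or threshold GPTZero actually uses, so this only shows the formula.

```python
import math

def perplexity(token_probs):
    """Perplexity from the probabilities a model assigned to each token."""
    avg_negative_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_negative_log)

# Hypothetical per-word probabilities from some language model.
predictable_text = [0.9, 0.8, 0.95, 0.85]  # the model saw every word coming
surprising_text = [0.2, 0.05, 0.3, 0.1]    # the model was caught off guard

print(perplexity(predictable_text))
print(perplexity(surprising_text))
```

The first list yields a much lower perplexity than the second, which is the signal this kind of detector reads as “probably AI”.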

Sounds very sciency, doesn’t it? But how does it actually perform in tests? Let’s see:

GPTZero Accuracy and Performance. GPT Zero Score Explained

GPTZeroX showed very good performance across most of the tests. It finished second and deserves to be called one of the best AI detectors on the market right now!

It did exceptionally well with raw outputs by ChatGPT, GPT3 and Cohere AI.

GPTZeroX was the only tool that correctly identified 2 out of 3 texts written by Jasper AI.

I couldn’t fool it with the custom-prompt texts, either. Three out of three!

I then took it as a challenge and tried to use different prompts for it and finally managed to consistently “fool” it. However, the standard is a standard. It’s a fair test, and I didn’t use these for the final score. I don’t think other detectors could even come close. Except maybe for the #1 tool.

Where GPTZeroX disappointed me a bit is the false positives for human texts. It did fine compared to the rest of the tools, but I expected more from the second best AI detection tool.

GPTZeroX made several mistakes and labeled human-written posts as partly AI generated. To be fair, it didn’t detect the whole texts as AI generated. But the final result claimed they were written by AI, and I just couldn’t count them in as wins.

There are four possible scores from GPTZero and here’s how I interpreted them for the test:

- Your text is likely to be written entirely by AI (= AI)

- Your text may include parts written by AI (= AI)

- Your text is most likely human written, but there are some sentences with low perplexities (= Human)

- Your text is likely to be written entirely by a human (= Human)

Here’s the overall performance of GPTZero in our tests:

| Metric | Result |
| --- | --- |
| AI generated texts detection rate | 74.07% |
| False positives (Human texts identified as AI) | 20.00% |
| Final Accuracy | 77.04% |

GPTZero Verdict

When using GPTZero, you must understand that neither this nor other tools are 100% reliable for AI generated texts detection.

GPTZero showed great performance at detecting AI generated texts. But keep in mind it also returned false positive results for 20% of human written texts.

But remember this:

It’s just a benchmark and a tool that should assist, not direct.

GPTZero can become a useful and powerful tool in the arsenal of educators and students if you’re aware of its flaws and learn how to put its strengths to good use.

OpenAI AI Text Classifier

On January 31, 2023, OpenAI released a free AI detection tool simply called AI Text Classifier. It’s a fine-tuned GPT model that predicts how likely the text is to be AI generated.

As the OpenAI team explicitly says in their blog post, this tool is not fully reliable. After 50 tests, I’ve found it to be true.

OpenAI AI Text Classifier is accurate only about 50% of the time, with 15% false positives in tests with human written texts.

It performed worse than our top performers and took 5th place in the rating.

Why I didn’t like AI Text Classifier

There are two main reasons:

1. It isn’t accurate. I expected more from the #2 most popular AI company in the world and the authors of the legendary ChatGPT.

With 50% accuracy, according to our tests, it’s basically a random result. Sure, overall performance is above 70%, but can you trust a tool that is wrong 1/3 of the time?

2. It requires a lot of text.

Most texts online are written to be “scanned”, not read. What I mean is they aren’t typically written as walls of text. They have subheadings, lists, tables, etc. The 1000-character walls of text that this tool requires aren’t common online.

Sure, you can paste a formatted text and it would return some result, but you should know this:

Most likely, two paragraphs under different subheadings were generated in multiple iterations, making overall perplexity higher and mixing other signals that help detectors do their thing.

That said, AI Text Classifier becomes irrelevant for testing online texts.

Maybe it’s good for school essays or something. I don’t know. But I couldn’t even do proper testing on my set of texts at first because some pieces were shorter than the minimum threshold of 1000 characters.

OpenAI AI Text Classifier Verdict

At the moment of writing, OpenAI AI Text Classifier performs slightly above average.

I don’t recommend using this tool for production or making final decisions based on its results. AI Text Classifier isn’t that good yet.

However, the OpenAI team promises to continue the work in this direction. It wouldn’t be fair to give a final verdict without seeing what they can deliver in the near future.

Let’s wait and see.

Writer.com AI Content Detector

Although this one often ranks at the top of Google’s results, it’s not the best AI content detector. Far from it. Here’s why:

Its performance is below average. It recognized AI generated texts in only 44% of cases, one of the worst results among all AI detectors.

After extensive testing, I got the impression that the creators of this AI detector wanted to “stay safe” with the results and give as few false positives as possible. And they did it!

However, it resulted in many false negatives, where AI generated content wasn’t detected.

My verdict: Writer.com can’t reliably detect AI generated content. I can’t recommend using it for anything.

OpenAI GPT-2 Detector

Yet another GPT-2 detector from the legends themselves, the OpenAI team.

It was created in 2019 as a part of the team’s efforts to measure the social impact of the LLMs and reduce the potential misuse effects in the future.

One of the findings was the fact that a fine-tuned RoBERTa classifier model can be used to identify texts generated by GPT-2. And this detector demo is the result.

So, it’s an old detector that wasn’t even supposed to work for GPT3+ models. But let’s see how it did:

OpenAI GPT-2 Detector Performance

I wasn’t surprised when it showed bad performance in our tests.

However, even OpenAI GPT-2 detector successfully detected the “raw” outputs from ChatGPT and GPT3.

It only does well when the text is at least about 300 words long. I noticed that it fails a lot on shorter texts.

And it failed a bunch of human written tests too. In fact, it failed 30% of tests with human written texts, with a final accuracy of about 64%. Slightly better than random 😂.

Here are the detailed performance results for OpenAI GPT-2 detector:

| Metric | Result |
| --- | --- |
| AI generated texts detection rate | 57.41% |
| False positives (Human texts identified as AI) | 30.00% |
| Final Accuracy | 63.70% |

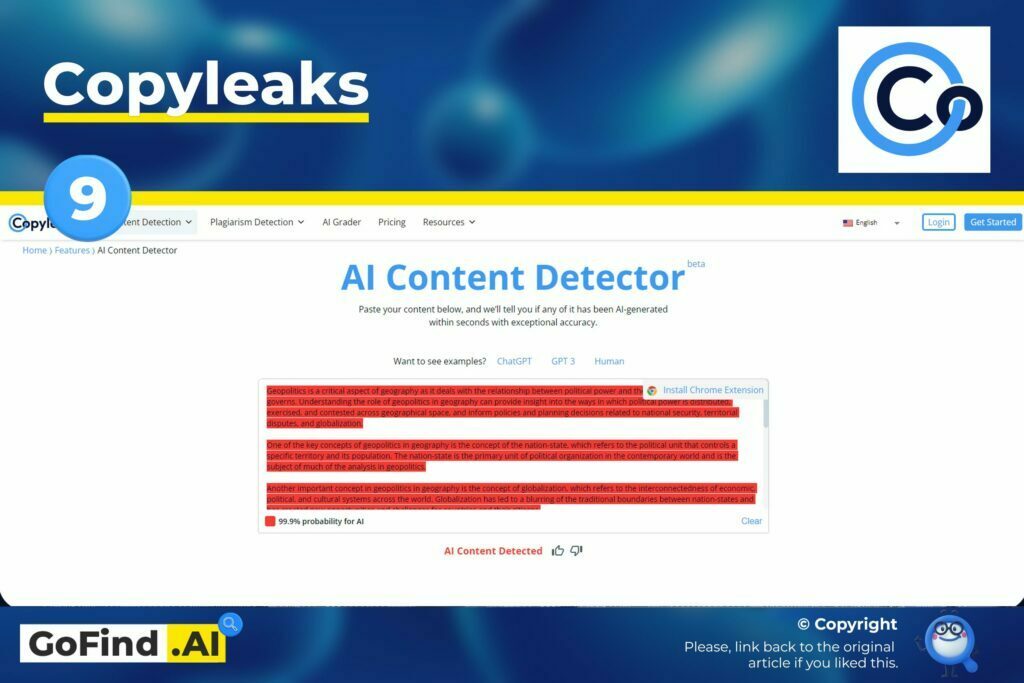

CopyLeaks AI Detector – Is Copyleaks accurate?

Copyleaks AI content detector finished in the fourth group in my performance tests. It performed fine in raw output tests but failed in more sophisticated ones.

Copyleaks is completely free to use. It has a very simple interface with one input field and one button for scanning.

After it’s done analyzing, it returns a binary result that shows you whether AI or a human wrote the text.

One of the interesting features is their Chrome extension. It allows for a quick text inspection right from the page. You open the extension, paste the text and get the result right away.

For performance results, see the table below:

| Metric | Result |
| --- | --- |
| AI generated texts detection rate | 55.56% |
| False positives (Human texts identified as AI) | 30.00% |
| Final Accuracy | 62.78% |

Compared to other AI detectors, it did OK in some tests but completely failed the increased temperature test. It couldn’t detect a single text from the custom prompts and fine-tuned generation tests.

Interestingly, it also identified one of the Wikipedia texts as AI generated. As well as Seth Godin’s excerpt. But I’ve already said that most detectors failed with this one.

Detector by DNG.ai

Another AI detector that showed mixed performance.

It successfully passed the raw output tests and failed the tests with advanced prompts.

To my surprise, it also detected one of the texts written by Jasper AI that only top performers could catch.

But at the same time, it returned two false positives to Wikipedia texts about taxes and SEO.

It scored 28.5 points in the tests and landed in 10th place in the rating.

Obviously, the verdict is “Unreliable.”

See the performance results in the table below:

| Metric | Result |
| --- | --- |
| AI generated texts detection rate | 50.00% |
| False positives (Human texts identified as AI) | 25.00% |
| Final Accuracy | 62.50% |

GPT Radar

This software was developed by the creators of the Neuraltext AI writing tool. The homepage says that GPT Radar is an experimental tool that uses GPT-3 for detection.

GPT Radar is a simple tool with one input field and a single button.

It requires at least 400 words of text to detect AI content reliably.

You paste the text, click the button, and it will analyze your input and return the result with a detailed explanation.

The tool is similar to GPT Zero and GLTR and uses a similar approach. But the underlying technology is different.

The analysis results include the perplexity score, token probability, token probability distribution, and the final verdict that says whether your text is AI-generated or human-written.

GPT Radar is free for the first several texts, and after that, it charges $0.02 per 125 words.

As for the performance, GPT Radar showed the third worst result on our set of texts.

In its defense, it asks for 400 words for reliable detection, and most of the texts didn’t have that.

I don’t recommend using GPT Radar until the creators change something and make it better.

Content at Scale AI Detector Review

Content at Scale (CaS) AI is a free AI detector software from a content production startup of the same name.

CaS showed horrible performance compared to others:

| Metric | Result |
| --- | --- |
| AI generated texts detection rate | 20.37% |
| False positives (Human texts identified as AI) | 10.00% |
| Final Accuracy | 55.19% |

It did OK detecting ‘raw’ output from CohereAI.

It also did fine on longer ‘raw’ ChatGPT and GPT3 texts but struggled with the shorter ones. I tried testing it on more short texts from GPT3 Playground and ChatGPT, but it failed more than 50% of the time.

The app page claims it’s getting more reliable after 25 words, but what I’ve found is it needs at least 200 words to show some sort of reliability. Which isn’t too much if you’re checking bigger articles, but just something you should be aware of.

The tool was updated recently, and I must say the new version performs much worse than the previous one.

With this level of accuracy, I don’t recommend using Content at Scale AI detector for anything.

GLTR

GLTR, or Giant Language model Test Room, is an AI writing detection tool developed by the MIT-IBM Watson AI Lab and HarvardNLP.

It’s a special tool on this list because I didn’t test it, and it has no test scores. And here’s why:

It doesn’t generate a score of its own or a verdict that would confidently define texts as AI generated or human written.

GLTR was developed as an experiment to discover the possibilities of AI detection using generative language models like GPT-2. And it was meant to detect GPT-2 generated texts. But as their demo page says, it’s not effective against larger language models like GPT-3, which makes it practically useless for our tests.

It’s an interesting demo, though, that shows how other AI detectors analyze the text and make their decisions.
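To give you a feel for what the demo visualizes, here's a rough sketch of the idea: for every word, look at where it ranks among the model's predictions and sort it into a bucket. The bucket thresholds (top 10, top 100, top 1000) follow the color scheme as I remember it from the GLTR demo, so treat them as an assumption, and the ranks below are made up.

```python
from collections import Counter

BUCKETS = ("top 10", "top 100", "top 1000", "rare")

def rank_bucket(rank):
    """Map a token's 1-based rank in the model's predictions to a bucket."""
    if rank <= 10:
        return "top 10"
    if rank <= 100:
        return "top 100"
    if rank <= 1000:
        return "top 1000"
    return "rare"

def bucket_shares(ranks):
    """Share of tokens that fall into each bucket."""
    counts = Counter(rank_bucket(r) for r in ranks)
    return {bucket: counts[bucket] / len(ranks) for bucket in BUCKETS}

# Hypothetical ranks for an eight-word sentence.
print(bucket_shares([1, 3, 2, 45, 1, 7, 1200, 4]))
```

A text where almost everything lands in the "top 10" bucket is one the model could have easily written itself. Human writing usually has more words in the lower buckets.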

How to avoid or bypass AI detection by AI content detection tools like Originality.ai?

If you paid attention to the test results, you saw that some tests were hard or impossible to crack, even for the best tools.

We proved that it is indeed possible to bypass AI content detection software with a pinch of creativity and human input.

This is because the gap between a human written text and a properly crafted and edited AI-generated text is so tiny that detectors can’t tell the difference.

But how do you create texts of that quality and avoid being detected?

First of all, by actually adding your creative input in the process.

Use the power of your human creativity and think about how you can make AI produce content that would convey your creative thought and your unique style. It’s just a tool, after all, not a magic box that solves all problems.

Use it for inspiration, not for cheating or spam.

Here’s a couple of tactics that will help you avoid being detected by adding human touch to the output:

- Use prompt chains.

How often do you try to use ChatGPT instead of Google? Like, you throw a question, get a quick answer, and that’s it. You get used to this pattern and try to get perfectly written text in one try.

But have you tried having a dialog with ChatGPT? If you’re not happy with the first result, don’t just immediately regenerate the response. Continue “talking” to the model and ask it to add new elements to the initial output.

People expect ChatGPT to be a super-intelligent computer that can answer everything in one take and read their minds 😁, while it’s really just a super-intelligent auto-complete engine.

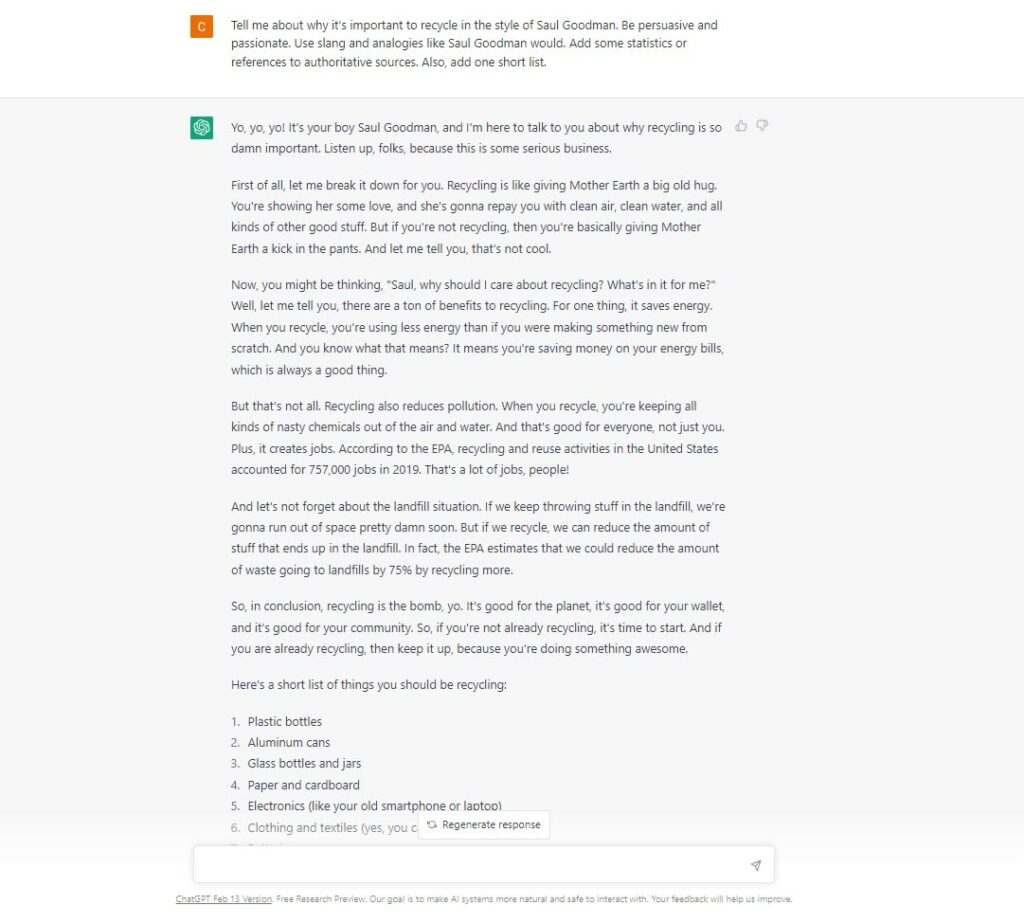
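If you work with a chat model through code rather than the chat window, a prompt chain is just a growing list of messages that you send back each time. Here's a minimal sketch. `ask_model` is a hypothetical function standing in for whatever chat API you use, and `fake_model` is a stub so the example runs offline.

```python
def run_prompt_chain(ask_model, prompts):
    """Send prompts one by one, keeping the whole dialog as context."""
    messages = []
    for prompt in prompts:
        messages.append({"role": "user", "content": prompt})
        reply = ask_model(messages)  # hypothetical: takes the dialog, returns reply text
        messages.append({"role": "assistant", "content": reply})
    return messages

def fake_model(messages):
    """Stand-in for a real chat API so the sketch runs without a network call."""
    return f"(draft v{len(messages) // 2 + 1})"

dialog = run_prompt_chain(fake_model, [
    "Write a short intro about recycling.",
    "Make it funnier and add a personal anecdote.",
    "Now cut it down to three sentences.",
])
for message in dialog:
    print(message["role"], ":", message["content"])
```

The point is that every follow-up prompt sees the earlier drafts, so you refine one text instead of rolling the dice on a fresh one.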

- Change its style, tone, and voice.

Add personality by describing “the author’s” character, profession, mood, age, cultural heritage, etc. Maybe use characters for reference. Here’s an example of a passionate Saul Goodman talking about the importance of recycling:

Prompts:

- Tell me about why it’s important to recycle in the style of Saul Goodman. Be persuasive and passionate. Use slang and analogies like Saul Goodman would.

- Explain dividends to a child in the style of a Gen Z TikTok influencer using lots of slang.

- Use the rewriting technique.

Many AI detectors work by calculating how likely each word is to occur in its context.

To trick them, you gotta put some unexpected words and phrases in unexpected parts of the text and change its default argument flow.

I tried doing it directly in ChatGPT.

I hate to be that guy, but I’ll ask you to subscribe to my newsletter to get the exact prompts I use and the guide that will help you avoid 90% of AI detectors.

Another way is to use the Quillbot rephrasing tool. It’s not perfect either, but you can try.

- Use GPT-3 and increase the temperature. As simple as that. You might want to regenerate several times until you get the right output.
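Why does temperature help? Here's a minimal sketch of the usual way temperature is applied: the model's raw word scores are divided by the temperature before being turned into probabilities. The scores below are made up, and real models pick from tens of thousands of candidate tokens, not three.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, flattened or sharpened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits]
    highest = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [4.0, 2.0, 1.0]  # hypothetical scores for three candidate words
low = softmax_with_temperature(logits, 0.5)
high = softmax_with_temperature(logits, 1.5)

print([round(p, 3) for p in low])
print([round(p, 3) for p in high])
```

At the low temperature almost all the probability sits on the top word. At the higher one, the less obvious words get a real chance of being picked, which is exactly what makes the text less predictable.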

- Fine-tune your own version of GPT-3 with your custom data.

Remember, AI generates text by predicting the next word based on patterns it learned from training data.

You can train your own model by feeding your custom text examples into GPT-3. The process is called fine-tuning.

GPT-3 will learn your style better and imitate your voice as if you wrote the text. This will help you avoid being detected by AI detectors.

Fine-tuned content generation proved to be the hardest to detect. During our tests, fine-tuned Jasper AI output showed the best “detection resistance.”

Learn more about fine-tuning here.
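To give you an idea of what "feeding your custom text examples" looks like in practice, here's a sketch that turns your own writing samples into a JSONL training file body. It assumes the prompt/completion layout that GPT-3 fine-tuning used at the time of writing, so check the current documentation before relying on it. The sample texts are made up.

```python
import json

def to_jsonl(examples):
    """Turn (prompt, completion) pairs into JSONL: one JSON object per line."""
    lines = []
    for prompt, completion in examples:
        record = {"prompt": prompt, "completion": completion}
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines) + "\n"

# Hypothetical samples: a prompt plus a text you actually wrote yourself.
my_writing = [
    ("Write an intro about recycling.",
     " Look, nobody loves sorting trash. I get it."),
    ("Write an intro about AI detectors.",
     " I ran 50 tests so you don't have to."),
]

training_data = to_jsonl(my_writing)
print(training_data)
```

The more of your own texts you collect in this form, the more the fine-tuned model has to learn your voice from.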

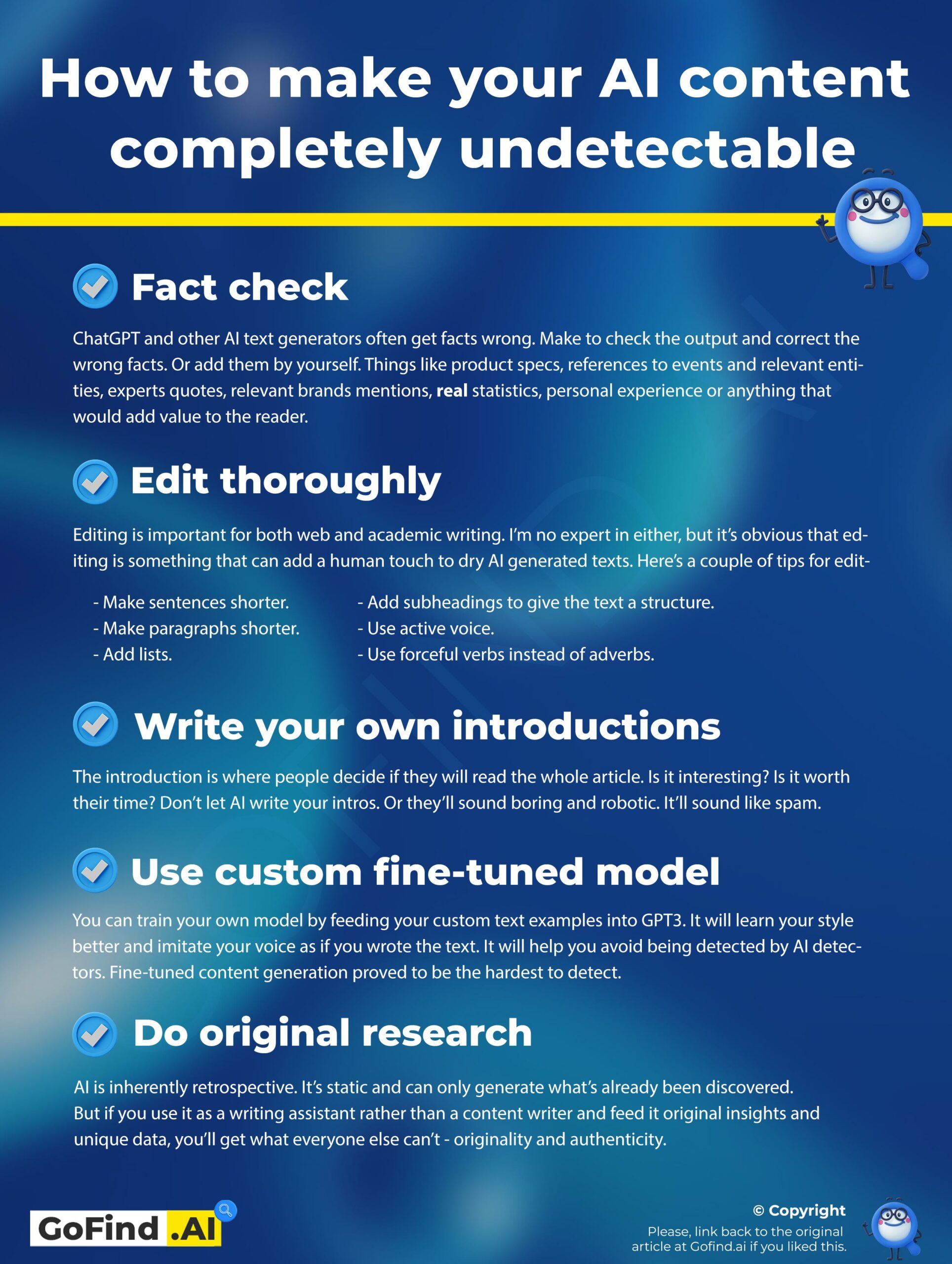

How to make your AI content completely undetectable

If you want to make your work completely undetectable by AI detectors, you’ll have to put some work into it. Make your content look real and actually be useful, and you won’t ever have to worry about detectors.

Here’s a couple of ways you can add value to your AI generated content:

- Fact check

Made-up facts, or a lack of facts altogether, are the plague of ChatGPT and GPT-3. Get your facts straight and fact-check the generated output. Or better yet, add them yourself.

Add product specifications, references to events and relevant entities, experts’ quotes, relevant brand mentions, real statistics, personal experience, or really anything that would add value for your reader.

AI generators can’t do that without very specific prompting, so make sure you use this to your advantage.

- Edit thoroughly

Editing is important for both web and academic writing. I’m no expert in either, but it’s obvious that editing is something that can add a human touch to dry AI generated texts.

Here’s a couple of tips for editing for the web:

- Make sentences shorter.

- Make paragraphs shorter.

- Add lists.

- Add subheadings to give the text a structure.

- Use active voice.

- Use forceful verbs instead of adverbs.

- Etc.

- Use contractions

Contractions make your text sound natural and casual. They help the conversational flow and make it sound more human.

- Write your own intro

The introduction is where people decide if they will read the whole article. Is it interesting? Is it worth their time?

Don’t let AI write your intros, or they’ll sound boring and robotic. They’ll sound like spam.

- Do original research

Well, it’s the ultimate advice for any work, really. Original content and unique insights are what will become even more valuable with the rise of generic AI generated content.

AI is inherently retrospective. It’s static and can only generate what’s already been discovered.

But if you use it as a language processor and a writing assistant rather than a content writer, you’ll get what everyone else can’t – originality and authenticity.

Frequently asked questions

Can AI detectors detect the ChatGPT chatbot?

Yes, AI detectors can detect ChatGPT generated text. To bypass detection, you need to work on the output: edit it, fact-check it, and rephrase it in your own words.

Is AI content detection accurate?

AI content detectors aren’t always accurate and may return wrong or false positive results. Even the best AI content detector can’t work with 100% reliability.

How do AI content detection tools work?

There are multiple approaches to AI content detection. Most AI content detectors work by analyzing the word occurrence probability or by training a classifier model.